I've stumbled across some settings that let me convert an mjpegstream to WebRTC:

uv4l --driver mjpegstream --auto-video_nr --uri "http://192.168.0.26/webcam/?action=stream" --server-option=--port=8888 --server-option=--enable-webrtc --server-option=--enable-webrtc-video --server-option=--webrtc-enable-hw-codec

This is great in terms of bandwidth and seems to be okay on the CPU. It'd be interesting to see if this improves performance on a Pi Zero W.

The problem is that the uv4l WebRTC demo interface, and pretty much every other interface I've found, is aimed at videoconferencing. This doesn't need two-way control or anything fancy. I've tried cutting down the demo page (1200 lines!) but it frustrates me.

I wish webrtc simply worked in a <video> tag.

Anyhow, I'm putting it here in case anyone is interested. Have I mentioned how much I hate JS?


I'm personally hurt that you didn't tag me in this. :laugh:

Have you seen this? You might be able to climb into /usr/share/uv4l/demos/facedetection/index.html and then remove all the stuff you don't need from that webpage.

I'm guessing you're over here, though.

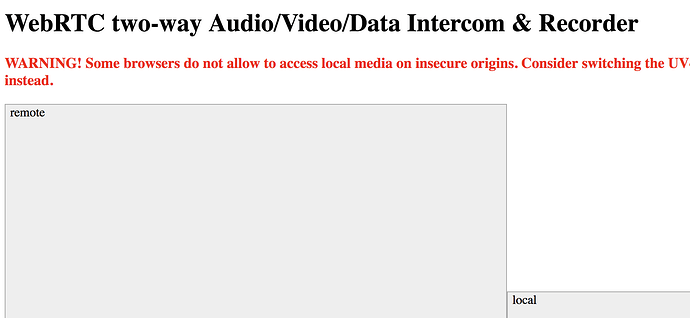

The one I'm using is "WebRTC two-way Audio/Video/Data Intercom & Recorder", which is embedded in the uv4l-server and looks like this. It's similar to the facedetection one, and I may end up ripping out a ton of JS and doing it myself. But I'm surprised I haven't found a simple shim to do this.

Broken link.

I've played around with WebRTC a little bit, but it's been a while. From memory, I thought Chrome could handle it natively in a video tag of some kind, but maybe it was a different element type.

What he said on the broken link, btw. Is it this one?

Aha, the link was just a screenshot showing the demo interface in uv4l. I inlined the link so it wouldn't expand, but apparently that was a fail. Here we go:

@jneilliii I suspect you are thinking of webm in the video tag? The problem with webm/DASH/the apple thing is the latency. So the goals are lower bandwidth (than mjpeg), low latency (under 1sec), no huge CPU hit on the Pi, and less of a CPU hit on the client computer. No big deal

I only notice the client CPU hit when leaving 5 mjpeg streams open for hours on end.

Is WebRTC re-encoding any better in performance than re-encoding to H.264 and using an MP4 URL in a video tag?

Can, and it certainly has HW accelerator support. On the bright side that's built into the browser (so I'll try it); on the down side the latency may be worse.

I know with my RTMP stream re-encoder there is a ~45 second delay to create the buffer for the stream, but that's because it's posting to RTMP servers re-encoded into Flash. I'm getting the uv4l stuff installed on my Zero (only Stretch install right now) to look at it. But I did find the following on WebRTC embedded in video. This of course is utilizing the local webcam and not a URL to the server, but I would think you could accomplish it the same way.

So after getting everything installed on the pi zero I was able to get the demo to connect to the re-encoded stream. The trick was pressing the call button at the bottom of the default page.

Install commands for a Pi Zero W running Raspbian Stretch, for reference:

curl http://www.linux-projects.org/listing/uv4l_repo/lpkey.asc | sudo apt-key add -

sudo nano /etc/apt/sources.list

add the line "deb http://www.linux-projects.org/listing/uv4l_repo/raspbian/stretch stretch main" to the end of the file and save.

sudo apt-get update

sudo apt-get install uv4l uv4l-raspicam uv4l-webrtc-armv6 uv4l-mjpegstream uv4l-server

uv4l --driver mjpegstream --auto-video_nr --uri "http://192.168.0.26/webcam/?action=stream" --server-option=--port=8888 --server-option=--enable-webrtc --server-option=--enable-webrtc-video --server-option=--webrtc-enable-hw-codec

Then head over to http://192.168.0.26:8888/ and click WebRTC to get to the demo page from the screenshot you posted. At the bottom of that page, press the call button, which just calls the start() function in the head of the page. Looking at the source, the page is just using a video tag.


Gotcha, yeah, so it is a <video> tag but still requires a big pile of JS to run.

Quick tip to replace the "sudo nano" and "add the line" instructions:

echo "deb http://www.linux-projects.org/listing/uv4l_repo/raspbian/stretch stretch main" | sudo tee /etc/apt/sources.list.d/uv4l.list
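Putting the install commands from earlier in the thread together with this tip, the whole setup could be wrapped in one shell function. This is only a sketch, assuming Raspbian Stretch on an armv6 Pi (Zero / Zero W); the name install_uv4l is made up for illustration, and nothing is installed until you actually call it.

```shell
#!/bin/sh
# Sketch: install uv4l with WebRTC support on Raspbian Stretch (armv6 Pi Zero).
# install_uv4l is a hypothetical helper name; the commands are the ones
# already posted in this thread.
install_uv4l() {
    # Trust the uv4l repository signing key.
    curl http://www.linux-projects.org/listing/uv4l_repo/lpkey.asc | sudo apt-key add - || return 1

    # Register the repository in its own file instead of editing sources.list by hand.
    echo "deb http://www.linux-projects.org/listing/uv4l_repo/raspbian/stretch stretch main" \
        | sudo tee /etc/apt/sources.list.d/uv4l.list || return 1

    # Refresh the package index, then install the uv4l packages.
    sudo apt-get update || return 1
    sudo apt-get install uv4l uv4l-raspicam uv4l-webrtc-armv6 uv4l-mjpegstream uv4l-server
}
```

Source the file and run install_uv4l once; each step bails out early if the previous one failed.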

Noting here because it seems like a decent place. Using the pi webcam, a stream (to /webcam/?action=stream) consumes about 100mb per minute; an optimized stream (fps=2, 640x480) is about 20mb/minute.
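For comparison with network link speeds, here is a rough conversion of those per-minute figures into megabits per second. It assumes "mb" means megabytes (10^6 bytes), which is a guess; the function name is just for illustration.

```shell
#!/bin/sh
# Convert a data rate from megabytes per minute to megabits per second.
# Assumption: "mb" in the post means megabytes (10^6 bytes).
mb_per_min_to_mbit_per_s() {
    # 1 byte = 8 bits, 1 minute = 60 seconds; LC_ALL=C keeps "." as the decimal point.
    LC_ALL=C awk -v mb="$1" 'BEGIN { printf "%.2f\n", mb * 8 / 60 }'
}

mb_per_min_to_mbit_per_s 100   # default stream: prints 13.33
mb_per_min_to_mbit_per_s 20    # fps=2, 640x480: prints 2.67
```

Under that assumption, five default streams add up to roughly 67 Mbit/s.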

My poor Mac Pro can't consume 5 streams in a web browser

I was thinking about your implementation here and was wondering: instead of re-encoding the mjpg-streamer stream, what if you used this to replace it? That would give you the benefit of providing MJPEG streams, H.264 streams, WebRTC streams, etc.


Agreed, and I should be able to.

Or drop down the resolution by half (should be a quarter the bandwidth).
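The "quarter the bandwidth" estimate comes from the pixel count: halving both width and height leaves a quarter of the pixels. A quick sketch of that arithmetic, assuming the 640x480 size mentioned earlier and assuming JPEG frame size scales roughly with pixel count (only an approximation):

```shell
#!/bin/sh
# Pixel count for a given frame size (width height).
pixels() {
    echo $(( $1 * $2 ))
}

full=$(pixels 640 480)   # 307200 pixels
half=$(pixels 320 240)   # 76800 pixels

# Ratio of pixels per frame: full size vs. half resolution in each dimension.
echo "full=$full half=$half ratio=$(( full / half ))"   # prints full=307200 half=76800 ratio=4
```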

mjpegstream will never be efficient; it's basically a firehose. Sure, the frame size changes the overall bandwidth, but it's still really extreme. For instance, every frame is basically a keyframe.

Did you also consider gstreamer? That seems to be what astrobox ported to.

Yeah, it's basically only middleware AFAICT: not meant for serving to a web client, more server-to-server.

I haven't looked into the linked code, but based on what I've read here about this being a potential alternative to MJPEGstream that's more efficient on both the server and the client, that's fantastic! On my laptop, OctoPrint currently uses over a gigabyte of RAM (in a background tab) and around 15-30% CPU. Anything that can reduce this resource usage is a huge improvement! I hope this project is successful!
