So I tried testing this and it's a no go it seems. The MJPEG stream is still embedded in an RTSP wrapper, so you can't use it like I thought.

Hi all, this is my first post here, and I wanted the same thing: using a Wyze cam in Octoprint.

If you are still looking for this, I have written some instructions on how to get this working. Dafang Hacks are required, but it works quite well. Streaming over HTTP loses some quality, but if you have some spare CPU power available it can look quite fantastic. On a Raspberry Pi you will need to make concessions in terms of bit rate and frame rate.

This is done using vlc as ffserver was not an option for me.

Follow the instructions here.

Hi,

I was able to get the vlc option working for streaming (not screenshots) using the RTSP firmware from Wyze and I was able to get the ffserver and ffmpeg option working for both streaming and snapshots when working in a terminal window. The problem is trying to get ffserver and ffmpeg working outside of running them from terminal.

I set up a crontab for the pi user with successive @reboot entries. ffserver starts, but ffmpeg does not:

@reboot ffserver &

@reboot ffmpeg -rtsp_transport tcp -i 'rtsp://username:password@cameraip/live' http://127.0.0.1:8090/camera.ffm >/dev/null 2>&1

I then tried making a script that gets called by the crontab to just launch ffmpeg and then tried to have it launch both ffserver and ffmpeg. I have read a lot of posts about ffmpeg being launched from crontab and a script (.sh) and tried everything mentioned but no luck. For those in here that have gotten this to work, what is the trick to get it to launch at boot of your raspberry pi?

Thanks!
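One possible cause, which is an assumption on my part rather than something confirmed in this thread, is a startup race: at boot, ffmpeg may be launched before ffserver is listening or before the network is up, so it exits immediately and cron never restarts it. A minimal sketch of a retry helper that a single @reboot script could use. The name `retry` and the attempt and delay values are hypothetical; the ffserver and ffmpeg commands are the ones from the crontab above.

```sh
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it exits successfully, at most ATTEMPTS times,
# sleeping DELAY_SECONDS between failed attempts.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1   # give up after the last allowed attempt
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}

# Hypothetical use inside a script called from one @reboot line
# (left commented out so this block has no side effects):
#
#   ffserver &
#   retry 10 5 ffmpeg -rtsp_transport tcp \
#       -i 'rtsp://username:password@cameraip/live' \
#       http://127.0.0.1:8090/camera.ffm >/dev/null 2>&1
```

Cron also runs jobs with a much smaller environment than an interactive terminal, so using absolute paths for both binaries in the script is worth trying as well.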

Hello everyone!

I am wondering if there has been any progress on this or if you can help me. I have tried both instructions from @SrgntBallistic and @atom6 and get stuck on both. When I try to 'ffserver &' I get

"[1] 12580

pi@octopi : ~ $ -bash: ffserver: command not found";

When I try the VLC approach, I run './http_stream.sh' and get the same problem: a blank line and nothing happens. I have updated the firmware on the Wyze cam and get a stream in VLC, I just can't get it set up to run in Octopi. I'm fairly novice at programming things like this (meaning I follow instructions fairly well but couldn't make anything up on my own). Thanks in advance for any input!
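"command not found" means the shell could not find ffserver on the PATH at all. As mentioned further down the thread, 3.4.7 was the last ffmpeg version with ffserver built in, so a newer ffmpeg package will not ship it. A minimal sketch for checking which of the tools used in this thread are installed; the function name `check_tools` is hypothetical.

```sh
#!/bin/sh
# check_tools NAME...
# Prints "found: NAME" or "missing: NAME" for each tool and returns
# non-zero if at least one of them is not on the PATH.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found: $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Example (commented out so the block has no side effects):
#   check_tools ffmpeg ffserver cvlc
```

If ffserver shows up as missing, the VLC approach or a build of ffmpeg from the 3.4 series are the options discussed here.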

I've been closely reading this topic, as I just got OctoPrint installed today on an RPi4 and I have a Wyze V2 ready to go for this! Have there been any updates on getting this optimized?

I have been playing around tonight to reduce the load of my Octoprint streaming from my Wyze on my Pi 3+ and have reduced the load by a lot.

Using the Official rtsp firmware from Wyze.

cvlc -R rtsp://<rtsp user>:<rtsp password>@<rtsp ip addr>/live --sout-x264-preset fast --sout='#transcode{acodec=none,vcodec=MJPG,vb=1000,fps=0.5}:standard{mux=mpjpeg,access=http{mime=multipart/x-mixed-replace; boundary=--7b3cc56e5f51db803f790dad720ed50a},dst=:8899/videostream.cgi}' --sout-keep

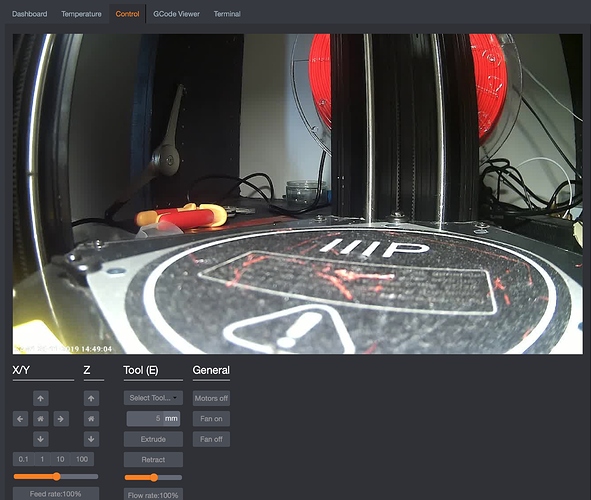

Now, in the Octoprint settings on the webcam tab, under Stream URL I put in: http://<octoprint ip address>:8899/videostream.cgi

I made a Docker container for it. https://hub.docker.com/r/eroji/rtsp2mjpg

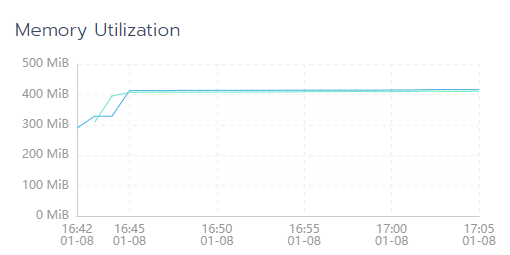

UPDATE: I updated the base image to alpine. Now the image is 21MB compressed and uses 100MB less memory when running. I noticed the original ubuntu image with its flavor of ffmpeg was leaking memory over time, and the stream would still die after about a day of run time. Hopefully the alpine one works better. Memory usage looks very steady and flat so far.

I updated the GitHub with docker-compose. You can pull it down yourself and run it as a service in docker.

Just run docker-compose up -d after cloning the repo. This will include an nginx proxy that listens on port 80. So you can get to the live stream at http://<ip>/live.mjpg and still snapshot at http://<ip>/still.jpg.

I played with the ffmpeg flags quite a bit and got it relatively stable. However, every so often the ffmpeg process would still die with an error like this.

[rtsp @ 0x7ffbe78c3460] CSeq 11 expected, 0 received.

My ffmpeg command is currently:

/usr/bin/ffmpeg -hide_banner -loglevel info -rtsp_transport tcp -nostats -use_wallclock_as_timestamps 1 -i rtsp://user:pass@mycamera/live -async 1 -vsync 1 http://127.0.0.1:8090/feed.ffm

If anyone here is an ffmpeg expert, feel free to comment on how I can improve it to make it completely stable!

I've never used docker. This is meant to run on a Raspberry Pi? Could you point me in the direction of some stuff to get up to speed? Thanks

I might give this a try. Are you kicking it off in a bashrc file or manually running it?

If you use docker-compose, it will create a persistent instance of the docker containers. Now, I didn't test it on a Pi, so the base image may need to be changed to support ARM platform. Let me know if that's the case and I will update it to include an ARM alternative.

In theory you could use the webcamstreamer plugin to do this by modifying the advanced setup section and matching your ffmpeg command I suppose.

In either case, whether you do that or run your docker image (once made ARM compatible) on an octopi instance, you'd have to disable the webcamd service in order to release the lock on the video device. And if your docker image is using port 80, I'm not sure how that would play out, since haproxy forwards port 80 by default to octoprint's port 5000.

Looks like docker-compose.yaml file is missing in the repo...

Give me a few, I'll push it. Trying to test build the armv7 image and commit that as well. No idea how well it will work though, as I have never tried to make an image for the Pi before. Even if it works, I doubt a Pi 3b+ would be able to handle the load. Maybe a 4b could...

This is the one that webcam streamer uses, in case it helps...it would just be missing ffserver.

Everything is committed and I built and pushed the armhf image to Docker Hub as well. I tried it with the default ffmpeg/ffserver flags and config and it's just way too heavy for a Pi 3b+. Some tuning will be required. I'll let others have at it. I also made an armhf version of docker-compose, but seeing as how the main container is already so taxing, I got rid of the nginx proxy.

So far, the best I can do with ffmpeg flags is the following. This is on a x64 system with enough resources. However, the ffmpeg process still dies occasionally.

/usr/bin/ffmpeg -hide_banner -loglevel info -rtsp_transport tcp -nostats -use_wallclock_as_timestamps 1 -i rtsp://user:pass@mycamera/live -async 1 -vsync 1 http://127.0.0.1:8090/feed.ffm

Interesting, I looked at his Dockerfile and it does appear to be something I can base mine on. I'll give it a try.

PS: Building image on a pi3 is absurdly slow

The eroji/rtsp2mjpg:armhf image is now updated with ffmpeg/ffserver compiled from source with the OMX driver, using version 3.4.7, the last version with ffserver built in. I also updated all the related files, including docker-compose.yaml. I gave it a try on my pi3b+ and it ran a few seconds longer before ffmpeg went south. So feel free to tweak the ffmpeg flags until it can sustain the stream. You may also need to change ffserver.conf. In that case, just create one in the local directory and mount it into the container by adding the mount to the docker-compose file.

UPDATE: I think I found the root cause of ffmpeg dying. None of my other wifi devices do this...

64 bytes from 10.64.20.21: icmp_seq=54 ttl=63 time=1.29 ms

64 bytes from 10.64.20.21: icmp_seq=55 ttl=63 time=1.17 ms

64 bytes from 10.64.20.21: icmp_seq=56 ttl=63 time=321 ms

64 bytes from 10.64.20.21: icmp_seq=57 ttl=63 time=308 ms

64 bytes from 10.64.20.21: icmp_seq=58 ttl=63 time=1.15 ms

64 bytes from 10.64.20.21: icmp_seq=59 ttl=63 time=3.95 ms

64 bytes from 10.64.20.21: icmp_seq=60 ttl=63 time=63.3 ms

64 bytes from 10.64.20.21: icmp_seq=61 ttl=63 time=195 ms

64 bytes from 10.64.20.21: icmp_seq=62 ttl=63 time=5.48 ms

64 bytes from 10.64.20.21: icmp_seq=63 ttl=63 time=9.67 ms

64 bytes from 10.64.20.21: icmp_seq=64 ttl=63 time=1.25 ms

64 bytes from 10.64.20.21: icmp_seq=65 ttl=63 time=4.00 ms

64 bytes from 10.64.20.21: icmp_seq=66 ttl=63 time=3.96 ms

64 bytes from 10.64.20.21: icmp_seq=67 ttl=63 time=5.30 ms

64 bytes from 10.64.20.21: icmp_seq=68 ttl=63 time=1.69 ms

64 bytes from 10.64.20.21: icmp_seq=69 ttl=63 time=1.22 ms

64 bytes from 10.64.20.21: icmp_seq=70 ttl=63 time=119 ms

64 bytes from 10.64.20.21: icmp_seq=71 ttl=63 time=1.35 ms
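The latency spikes in a capture like the one above are easier to spot with a small filter. A minimal sketch, assuming the usual Linux ping reply format with a `time=<ms>` field; the function name `ping_spikes` and the 100 ms threshold in the usage line are my own choices, not something from this thread.

```sh
#!/bin/sh
# ping_spikes THRESHOLD_MS
# Reads ping output on stdin and prints only the reply lines whose
# round-trip time is greater than THRESHOLD_MS.
ping_spikes() {
    threshold=$1
    awk -v limit="$threshold" '
        match($0, /time=[0-9.]+/) {
            # Skip the 5 characters of "time=" to get the number.
            rtt = substr($0, RSTART + 5, RLENGTH - 5)
            if (rtt + 0 > limit + 0) print
        }'
}

# Example (commented out because it needs the camera on the network):
#   ping -c 100 10.64.20.21 | ping_spikes 100
```

Running the same filter against another wifi device on the same AP gives a quick comparison of whether the spikes are specific to the camera.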

UPDATE #2: After digging around for 2 days I finally tracked the issue down to my POE switch that all my wireless APs are connected to. The uplink cable on the switch was throwing errors for probably more than a year, which was causing random packet loss. It wasn't until I actually tried to do something latency sensitive like realtime RTSP streaming over wifi that I finally caught the problem. Anyways, this stream is solid now. No random dying and restarts.

Does this make the Wyze cameras usable with Octolapse? I'm a little in the dark on that part. I figure it should, since Octolapse is just grabbing whatever the webcam stream is kicking out, but I would appreciate a slightly more informed second opinion.

I've got this nearly working using two different methods. Each currently has some advantages and drawbacks, and neither is working the way I'd hoped just yet.

Here's a WIP guide I'm building for running multiple octoprint instances on a single server which has the ffmpeg restreaming method I started out with.

This method has two drawbacks:

- The cpu power required is pretty high unless you get HEVC encoding working, which I haven't managed yet

- The octoprint instance will load the first frame of the mjpeg stream only. Manually refreshing is required to get any further frames

The other method I'm testing now is using VLC

I'm still working on the systemd service units for this, but after installing vlc and vlc-bin packages you can run this command in a screen session to test it out:

cvlc -R rtsp://<camera_username>:<camera_pass>@<camera_hostname>/live --sout-x264-preset fast --sout="#transcode{acodec=none,vcodec=MJPG,vb=10000,fps=5}:standard{mux=mpjpeg,access=http{mime=multipart/x-mixed-replace; boundary=--7b3cc56e5f51db803f790dad720ed50a},dst=:8990/videostream.mjpeg}" --sout-keep

and then putting into the octoprint config:

http://<octoprint_hostname>:8990/videostream.mjpeg

Good news is that this stream does work as expected.

The issue I'm having with this method is that I cannot for the life of me get nginx's reverse proxy to work. Here's the config I set up for it:

location /webcam_printer0/ {

proxy_pass http://127.0.0.1:8990/videostream.mjpeg;

}

When I try to access the new URL, nginx reports a 404.
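One thing worth checking is which upstream path nginx actually requests. When proxy_pass is given with a URI part, nginx replaces the part of the request path that matched the location prefix with that URI, and appends whatever is left over. The post does not say which URL was requested, so this may not be the cause of the 404 (a config that was not reloaded, or a location placed in a different server block, would also produce one). A minimal sketch that mimics only that prefix replacement in shell; `map_uri` is a hypothetical helper for illustration and ignores query strings.

```sh
#!/bin/sh
# map_uri LOCATION_PREFIX PROXY_PASS_URI REQUEST_PATH
# Prints the path nginx would send upstream for a prefix location
# whose proxy_pass includes a URI. Returns 1 if the request path
# does not start with the location prefix.
map_uri() {
    location=$1
    upstream_uri=$2
    request=$3
    case $request in
        "$location"*)
            # Replace the matched prefix, keep the remainder as-is.
            printf '%s%s\n' "$upstream_uri" "${request#"$location"}"
            ;;
        *)
            return 1
            ;;
    esac
}

# With the config above:
#   map_uri /webcam_printer0/ /videostream.mjpeg /webcam_printer0/
#   map_uri /webcam_printer0/ /videostream.mjpeg /webcam_printer0/stream
```

The first call prints /videostream.mjpeg, which is the path VLC serves. The second prints /videostream.mjpegstream, which VLC would not recognize, so anything appended after the trailing slash changes what is requested upstream.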

Once I can get either of these methods working properly, I plan to build a plugin for Octoprint to make it easy and accessible to everyone.

I'm also hoping to incorporate Intel's QuickSync (HEVC) and have been poking around with the concepts in this gist