On the Pi, I think using the OMX GPU driver is essential for this transcoding as well.

Well, I've made some progress, at least in that I've figured out a few ways NOT to make a lightbulb.

It seems that the hardware transcoding APIs cannot convert directly from H.264 to MJPEG. Therefore, the best way forward is to embed HLS or another browser-native video format instead of relying on MJPEG.

I think what I'll end up needing to do is use one of @jneilliii's plugins that allows embedding an iframe, and figure out the best way to get H.264-to-HLS transcoding working with the hardware APIs.

The other option is to replace the background image on the control tab with a video tag pointing at the H.264-based stream URL. I vaguely remember playing around with that years ago, but because the Pi's transcoding was still fairly slow and choppy, I gave up on it. I might still have that code lying around somewhere; I'll have to check.

Now that I'm thinking about it, I believe some web browsers will load the H.264 URL within an iframe, so the WebcamIframe plugin might actually work out of the box. Doing a quick test now.

Edit: So that does work using the iframe, but the video doesn't auto-start, so it's not like live streaming. You could probably get around that by using a video tag with the autoplay option enabled instead, like this:

$('#webcam_container').replaceWith('<video width="560" controls autoplay><source src="http://commondatastorage.googleapis.com/gtv-videos-bucket/sample/BigBuckBunny.mp4" type="video/mp4"></video>');

That's excellent! Any chance you could explain in a little more depth how you implemented that test?

I opened my OctoPrint page in Chrome, pressed F12 to open the developer tools, then pasted that line into the developer console and hit Enter.

Gotcha, so I'll have to figure out how to modify the actual page source to use a new URL like that. Another thing I need to make sure this works with is the Android app Printoid, but that'll have to come later. As long as I can monitor the print from a webpage, I can live without app integration.

A similar approach of replacing the image is used in the WebcamIframe plugin. You would just have to modify it to use the video tag code instead of the iframe tag code. It currently just pulls the URL from the webcam settings, but you could modify the plugin to use its own separate URL field with minimal changes.
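To make that swap a bit more concrete, here's a minimal sketch of a helper that builds the video tag markup from a URL. The names `buildVideoTag` and `escapeAttr` are made up for illustration and are not part of WebcamIframe; the default width of 588 and the `video/mp4` type are just taken from the snippets in this thread. It also adds `muted`, since most browsers block autoplay with sound.

```javascript
// Hypothetical helper, not part of any existing plugin.
// Escapes a value so it is safe inside a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Builds the <video> markup that would replace #webcam_container.
// options.width and options.type are assumptions with illustrative defaults.
function buildVideoTag(streamUrl, options = {}) {
  const width = Number(options.width || 588);
  const type = options.type || "video/mp4";
  return (
    '<video width="' + width + '" controls autoplay muted>' +
    '<source src="' + escapeAttr(streamUrl) + '" type="' + escapeAttr(type) + '">' +
    "</video>"
  );
}

// In the browser, with jQuery loaded as it is on the OctoPrint page:
// $('#webcam_container').replaceWith(buildVideoTag(streamUrl));
```

Building the string in one place means the URL could come from the webcam settings or from a separate plugin setting without touching the markup code.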

Awesome, thanks for the breadcrumbs! I'll look at that plugin and work on a modified version specializing in RTSP, and I'll keep this thread updated with developments as I go.

I'd be glad to help if you need it. I think the trick with RTSP is that HTML5 video tags don't support it directly, so you have to do some trickery to sort it all out: either transcode to another format/container, or go with a completely separate embedded viewer that uses websockets, if I remember correctly from all the reading I did on it before. It was a very big rabbit hole, but as best I can tell, HLS and DASH seem to be the current reliable streaming options. We also looked into WebRTC, but that seems to be geared more towards video-conferencing-type solutions than "just send me some video".
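As a rough illustration of that decision, here's a small sketch that sorts a stream URL into the cases described above. The function name and the returned labels are invented for this example, and guessing from the URL scheme and file extension is only a heuristic, not a reliable way to detect the format.

```javascript
// Hypothetical classifier: names and labels are illustrative only.
function classifyStream(url) {
  const lower = String(url).toLowerCase();
  // RTSP cannot be played by an HTML5 <video> tag directly.
  if (lower.startsWith("rtsp://")) return "needs-transcode";
  // Ignore any query string or fragment when looking at the extension.
  const path = lower.split(/[?#]/)[0];
  if (path.endsWith(".m3u8")) return "hls";
  if (path.endsWith(".mpd")) return "dash";
  // Anything else is treated like the usual MJPEG-style webcam URL.
  return "mjpeg-or-other";
}
```

A plugin could use something like this to decide whether to keep the normal image element or swap in a video player.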

Edit: WebRTC discussion over here.

OK, then I was already looking into the right things. I already have a solution that can transcode from RTSP to HLS, but it's still very CPU intensive, so I'm working on building a version of ffmpeg from source that will let me offload transcoding to VAAPI/QuickSync hardware.

So yeah that's the first thing I'll need to set up. Sadly the majority of guides I've been reading up on were published before a major change in ffmpeg that made a bunch of the include flags no longer work, so it's been hairy trying to pick that all apart.

Here's the gist I've been working from, for context

At the moment that's still not working, because the dependency and build configurations are no longer up to date.

Edit: I finally managed to build a version of libav and ffmpeg from source with VAAPI enabled, and got an HLS stream writing to a file, but I'm not sure yet whether it's working as expected.

ffmpeg -vaapi_device /dev/dri/renderD128 -i "rtsp://username:password@cameraIP/live" -vf 'format=nv12,hwupload' -c:v h264_vaapi -preset veryfast -c:a aac -b:a 128k -ac 2 -f hls -hls_time 4 -hls_playlist_type event "/tmp/stream.m3u8"

I did notice that the CPU usage on this is still pretty high (~28%), but it's certainly way better than the 61-75% utilization I was getting before.

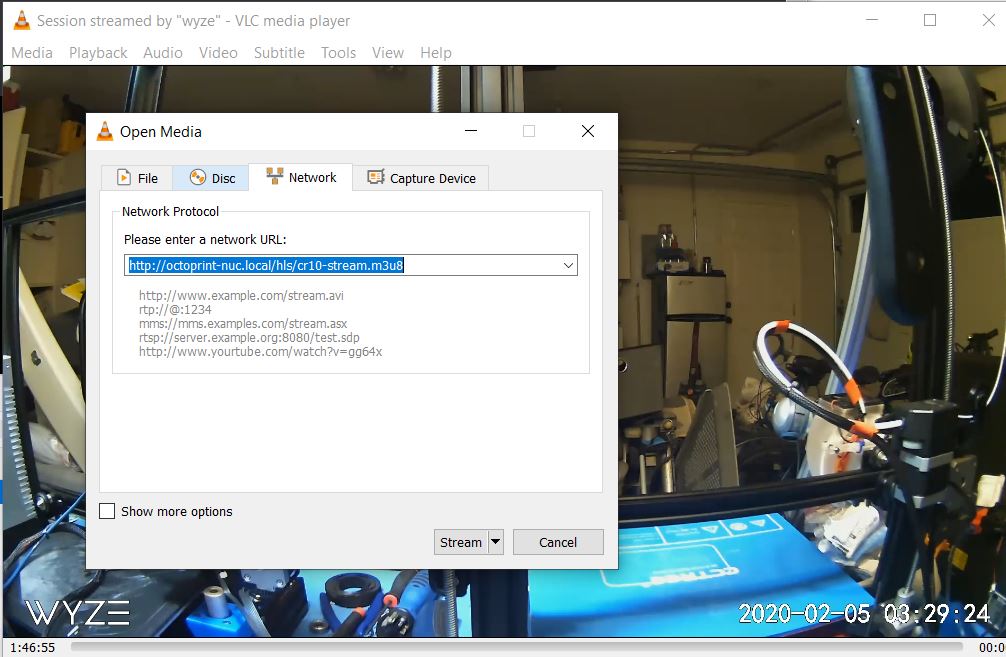

Well, I got 95% of the way there. I now have a workable m3u8 playlist that can be streamed using VLC, and a supposedly working nginx config to back it up, but I have a feeling I'm missing a trick here.

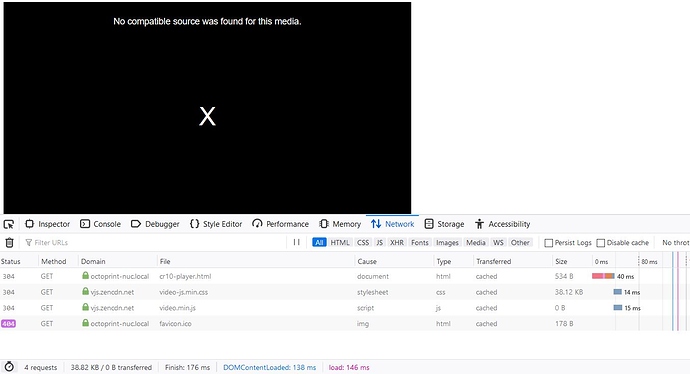

I'm using this guide for setting up a streaming server through nginx. It's not at all comprehensive, so it took me a bit to get it going. I managed to get almost everything working, except that the Video.js player keeps complaining that the video format is incompatible. Pics attached.

I made a fork and made some decent progress! I managed to get the player to show up with a little loading spinner. Still need to do some debugging, but it's progress! You should be able to install it from the link in the readme to test.

Edit:

I managed to get a static HTML page to play the stream successfully, but adding this code to the plugin just produces a missing div:

<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <title>Live Streaming</title>
</head>
<body>
  <script src="https://cdn.jsdelivr.net/npm/hls.js@latest"></script>
  <video id="video"></video>
  <script>
    if (Hls.isSupported()) {
      var video = document.getElementById('video');
      var hls = new Hls();
      hls.loadSource('https://octoprint-nuc.local/hls/cr10-stream.m3u8');
      hls.attachMedia(video);
      hls.on(Hls.Events.MANIFEST_PARSED, function() {
        video.play();
      });
    }
  </script>
</body>
</html>

And here's the line in the plugin. It's been minified to fit on one line:

$('#webcam_container').replaceWith('<script src="https://cdn.jsdelivr.net/npm/hls.js@latest"></script><video id="video"></video><script>if(Hls.isSupported()){var video=document.getElementById("video"),hls=new Hls;hls.loadSource("' + webcamurl + '"),hls.attachMedia(video),hls.on(Hls.Events.MANIFEST_PARSED,function(){video.play()})}</script>');

Edit 2:

Apparently I was mistaken about the static page playing. It shows one frame only, not continuous playback.

I think my first implementation seems to be working best, even though it's still not showing video:

$('#webcam_container').replaceWith('<video width="588" controls autoplay><source src="' + webcamurl + '" type="application/x-mpegURL"></video>');

Edit 3:

OK, so I actually figured out that the stream does work on mobile browsers, Safari, and M$ Edge (the old one, before the switch to Chromium), but not on Chrome or Firefox.

So it looks like it's a native browser video playback issue at this point, and I really just need to figure out a proper JS container that can live and work under OctoPrint.
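For what it's worth, the usual way around that split is to check for native HLS support first and only fall back to hls.js where it's missing. Here's a minimal sketch of that check. The function name `attachStream` and its return labels are made up for this example; `canPlayType`, `Hls.isSupported()`, `loadSource`, and `attachMedia` are the real browser and hls.js calls already used in the static page above. The video element and the `Hls` global are passed in as arguments so the logic doesn't depend on how the page loads them.

```javascript
// Sketch only: `video` is a <video> element, `Hls` is the hls.js global
// (may be undefined if the library failed to load).
function attachStream(video, url, Hls) {
  // Safari and most mobile browsers report native HLS support here.
  if (video.canPlayType("application/vnd.apple.mpegurl")) {
    video.src = url;
    return "native";
  }
  // Desktop Chrome and Firefox need hls.js (Media Source Extensions).
  if (Hls && Hls.isSupported()) {
    const hls = new Hls();
    hls.loadSource(url);
    hls.attachMedia(video);
    return "hls.js";
  }
  return "unsupported";
}

// In the browser, something like:
// attachStream(document.getElementById("video"), webcamurl, window.Hls);
```

The return value is only there to make it easy to log which path a given browser took while debugging.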

I'm also going to look into transcoding to DASH and see if that works any better.

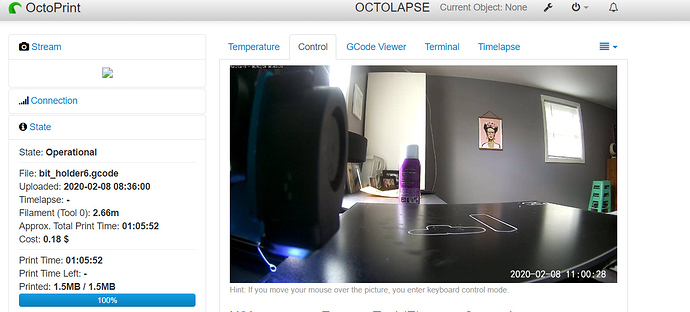

I managed to get my Wyze cam to display in OctoPrint using a ZoneMinder Docker container, which I have running on my Unraid server. After adding your camera's RTSP link to ZoneMinder, you can retrieve a link that OctoPrint can use by clicking the "montage review" tab in ZoneMinder, then clicking on your video stream, which will open the stream in a new window. Right-click on the stream, copy the image link, then paste it into OctoPrint's stream link field. A bit of a hacky workaround, but it works.

ZoneMinder is basically doing what I did, just with a really old build of ffmpeg and outdated software. It'll work, but it's not as efficient. I'm working on a comprehensive guide on how to do this the "right" way, and eventually I'll have an automated script and an OctoPrint plugin to work with.

If you need any help with the plugin let me know.

I think I will need help, yes. I want to be able to pass parameters to the ffmpeg execution script, like scale size, bitrates, etc.

I'm currently using a systemd unit to handle ffmpeg, but if it can be controlled and launched from the plugin itself, that would be ideal.
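As a starting point for the parameter side of that, here's a rough sketch of turning settings into an ffmpeg argument list, based on the command posted earlier in the thread. It's written in JavaScript (Node) only to match the other snippets here; an OctoPrint plugin would do the equivalent in Python. The function name and option names are invented for this example, and the `scale_vaapi` filter and `-b:v` bitrate flag are my assumption about how scaling and bitrate would be added to that command.

```javascript
// Hypothetical builder: option names are illustrative, not a real plugin API.
function buildFfmpegArgs(opts) {
  // Same upload chain as the posted command, with optional GPU-side scaling.
  const filters = ["format=nv12", "hwupload"];
  if (opts.width && opts.height) {
    filters.push("scale_vaapi=w=" + opts.width + ":h=" + opts.height);
  }
  const args = [
    "-vaapi_device", opts.device || "/dev/dri/renderD128",
    "-i", opts.input,
    "-vf", filters.join(","),
    "-c:v", "h264_vaapi",
    "-preset", "veryfast",
  ];
  // Only add a video bitrate when one is configured.
  if (opts.videoBitrate) {
    args.push("-b:v", opts.videoBitrate);
  }
  args.push(
    "-c:a", "aac", "-b:a", opts.audioBitrate || "128k", "-ac", "2",
    "-f", "hls",
    "-hls_time", String(opts.segmentSeconds || 4),
    "-hls_playlist_type", "event",
    opts.output
  );
  return args;
}

// Example launch from Node, without going through a shell:
// const { spawn } = require("child_process");
// spawn("ffmpeg", buildFfmpegArgs({ input: "rtsp://...", output: "/tmp/stream.m3u8" }));
```

Passing the arguments as a list rather than one long string avoids shell quoting problems with things like the RTSP URL and password.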

Of course, building ffmpeg from source also makes things more complicated.

There are a couple of ways to do that. If you look into the webcam streamer plugin or my YouTube plugin, they use dockerized containers of ffmpeg and pass parameters to them. The other option would be running ffmpeg directly in a separate thread; I think that's sarge.run with the async flag set to true. You might want to check out Octolapse, which I think uses this approach or something similar.

I haven't worked with Docker very much. Is it possible to have a properly built version of ffmpeg, with all the necessary options, deployed in a container?

I'll take a peek at those other plugins' source and go from there.

I'm not super familiar with it either, but that's what it's made for: having pre-built containers based on processor architecture. Definitely look at the webcam streamer one. He made Docker containers for both ARM and x86, if I remember correctly.

Eroji, I've been using your docker container, but after some time streaming I get an error stating that I am exceeding the 10000kbps limit. I saw there was a limit in the ffmpeg.conf file, which I increased to 20000kbps, but it doesn't seem to update the limit in the container, since I am still receiving the same error. Any tips? I've restarted the container, docker, and even the physical host with no luck.